Google has introduced a new AI agent named Project Astra, designed to interact with users through text, audio, and video inputs in real time. A demonstration video released by Google shows the agent holding real-time conversations with users, mirroring the capabilities previously demonstrated by OpenAI’s GPT-4o model.

Unprecedented Capabilities of Project Astra by Google

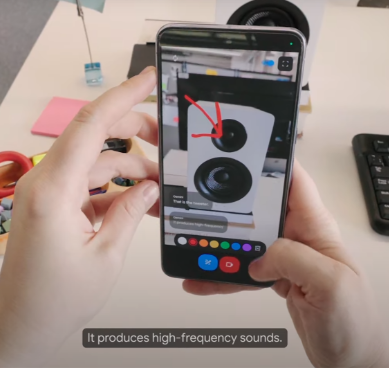

The new AI assistant from Google has demonstrated exceptional abilities. These include identifying objects within a room, recognizing and explaining specific code segments, and determining its location by observing the surroundings through a window. It can even locate the user’s glasses.

Project Astra also exhibited creativity by suggesting unique names for a dog. Furthermore, Google hinted at the potential integration of Project Astra with smartphones or smart glasses, which would imply a significant enhancement to Google Lens through the Gemini platform in the future.

Advanced Processing and Audio Enhancements

According to Google, Project Astra leverages advanced techniques to process information rapidly. These include encoding video frames, integrating video and speech inputs into a timeline of events, and caching this data for future reference. Google has also enhanced the audio of the new AI assistant, making it sound more natural while offering users the option to switch between different voices.
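
The processing steps Google describes can be pictured with a small sketch. This is only an illustration under stated assumptions, not Google's implementation: the `Event` and `Timeline` classes, the `encode_frame` helper, and the `max_events` limit are all hypothetical names, and a hash stands in for whatever learned encoder the real system uses.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since the session started
    kind: str         # "video" or "speech"
    payload: str      # encoded frame digest, or transcript text


def encode_frame(raw_frame: bytes) -> str:
    """Hypothetical stand-in encoder; a real agent would use a vision model."""
    return hashlib.sha256(raw_frame).hexdigest()[:12]


class Timeline:
    """Bounded cache of video and speech events, kept in timestamp order."""

    def __init__(self, max_events: int = 1000):
        self._max_events = max_events
        self._events = []

    def add(self, event: Event) -> None:
        self._events.append(event)
        # The two input streams may arrive out of order, so re-sort by time.
        self._events.sort(key=lambda e: e.timestamp)
        # Drop the oldest events once the cache exceeds its limit.
        overflow = len(self._events) - self._max_events
        if overflow > 0:
            del self._events[:overflow]

    def recall(self, keyword: str) -> list:
        """Return cached speech events that mention the keyword, oldest first."""
        needle = keyword.lower()
        return [
            e for e in self._events
            if e.kind == "speech" and needle in e.payload.lower()
        ]

    def __len__(self) -> int:
        return len(self._events)


# Example usage: merge one video frame and two utterances into one timeline.
timeline = Timeline(max_events=3)
timeline.add(Event(2.0, "speech", "Where did I leave my glasses?"))
timeline.add(Event(1.0, "video", encode_frame(b"raw-frame-bytes")))
timeline.add(Event(3.0, "speech", "Suggest a name for the dog"))
matches = timeline.recall("glasses")
```

Keeping both input types in a single time-ordered cache is what would let an agent answer a later question, such as where the glasses were last seen, from earlier observations.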

The Vision of an Autonomous AI Agent by Google

In discussing the need for an autonomous AI agent, Google DeepMind CEO Demis Hassabis emphasized the importance of the agent’s ability to comprehend and respond to the intricate and evolving world, much as humans do. This includes the agent’s capacity to perceive and remember visual and auditory input so that it can understand context and take appropriate action. Hassabis also highlighted the importance of the agent being proactive, teachable, and personal, so that users can interact with it naturally and without delays.

Future Integration and Availability

In the coming months, Google plans to integrate Project Astra’s capabilities into various Google products, including the Gemini app through the Gemini Live interface.