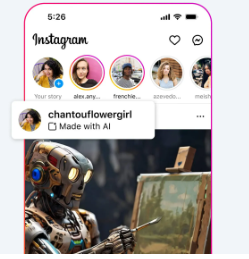

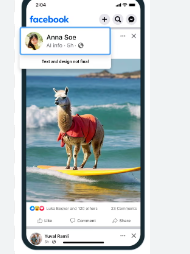

Meta has announced a significant update to how it labels AI-generated content on its apps. The change responds to public complaints that the “Made with AI” tag was being applied inaccurately to certain images.

Former White House photographer Pete Souza, for example, highlighted one such case: the tag appeared on a photo of a basketball game shot on film four decades ago. Souza suggested that using Adobe’s cropping tool and flattening the image may have triggered the label.

Kate McLaughlin, a spokesperson for Meta, emphasized the company’s ongoing efforts to improve its AI products and to work with industry partners on refining its approach to AI labeling. The new “AI info” label is meant to convey more precisely that content may have been modified, rather than implying that it is entirely AI-generated.

Challenges with Image Metadata and AI Labeling

The underlying issue appears to stem from the metadata that editing tools such as Adobe Photoshop attach to images, and from how different platforms interpret that metadata. As a result, Meta’s AI labeling ended up tagging real-life photos posted to Instagram, Facebook, and Threads as “Made with AI.”

Rollout of the New Meta Labeling System

The updated label will appear in the mobile apps first, followed by the web. According to McLaughlin, Meta has already begun rolling out the change across all of its surfaces.

Enhanced Information Accessibility

Clicking the new “AI info” tag opens a message similar to the one shown for the previous label, explaining why the label was applied. The message clarifies that the label can cover images generated entirely by AI as well as images edited with AI-enabled tools such as Adobe’s Generative Fill. Metadata tagging technologies like C2PA were intended to make it easier to tell AI-generated images apart from real ones. However, the anticipated progress in this area has yet to materialize.