To make its AI chatbot experience safer, Snapchat is introducing new tools, including an age-appropriate filter and information for parents.

A Washington Post investigation revealed that the GPT-powered chatbot Snapchat released for Snapchat+ subscribers was giving unsafe and unsuitable responses.

The Need for New Tools

The social media company said that after the chatbot’s debut, it discovered that some users were attempting to “trick the chatbot into providing responses that do not conform to our guidelines.” In response, Snapchat is introducing a few tools to control the AI's replies.

How Will the New Tools Work?

Snap has added a new age filter that lets the AI take users’ birthdates into account and give them responses suitable for their age. According to the company, the chatbot will “consistently take [users’] age into consideration.”

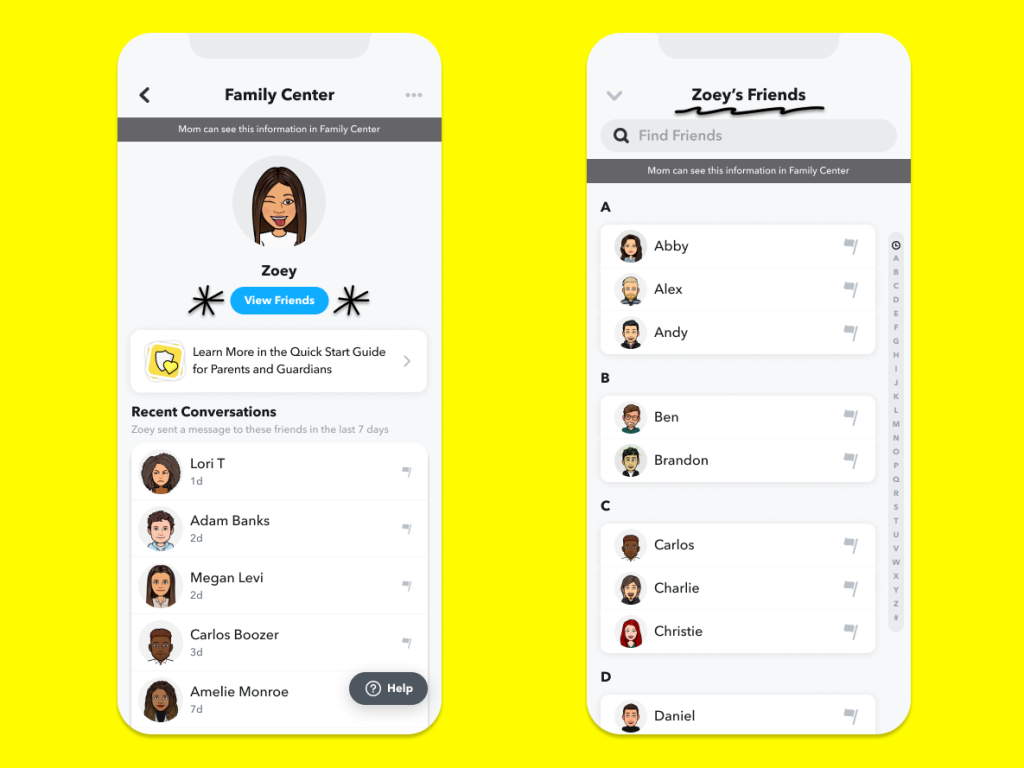

In the coming weeks, Snap also intends to give parents or other adults more information about how their children engage with the bot in Family Center, which was introduced last August.

The new feature will show parents whether their teenagers are interacting with the AI. To use these parental control features, both the adult and the adolescent must opt in to Family Center.

Snap clarified in a blog post that the My AI chatbot is not a “real friend” and that it uses conversation history to improve its responses. Users are also informed about data retention when they begin a conversation with the bot.

According to the company, only 0.01% of the bot's responses contained “non-conforming” language.

Snap classifies as “non-conforming” any comment that makes mention of violence, sexually explicit language, illegal drug use, child sexual abuse, bullying, hate speech, disparaging or biased remarks, racism, sexism, or the marginalization of underrepresented groups.

The company noted that in most of these cases, the inappropriate answers resulted from the bot repeating what users had said.

It also said that it will temporarily block access to the AI bot for users who misuse the service.

Closing Note

“We’ll keep applying these lessons to enhance My AI. We can implement a new system to prevent misuse of My AI with the aid of this data as well.

“In order to assess the severity of potentially harmful content and briefly revoke Snapchatters’ access to My AI if they abuse the service, we are integrating OpenAI’s moderation technology into our current toolkit,” Snap said.

Many people are worried about their safety and privacy as AI-powered tools proliferate quickly.

Last week, the Center for Artificial Intelligence and Digital Policy urged the FTC in a letter to halt the deployment of OpenAI’s GPT-4 technology, claiming that the startup’s technology is “biased, deceptive, and a risk to privacy and public safety.”