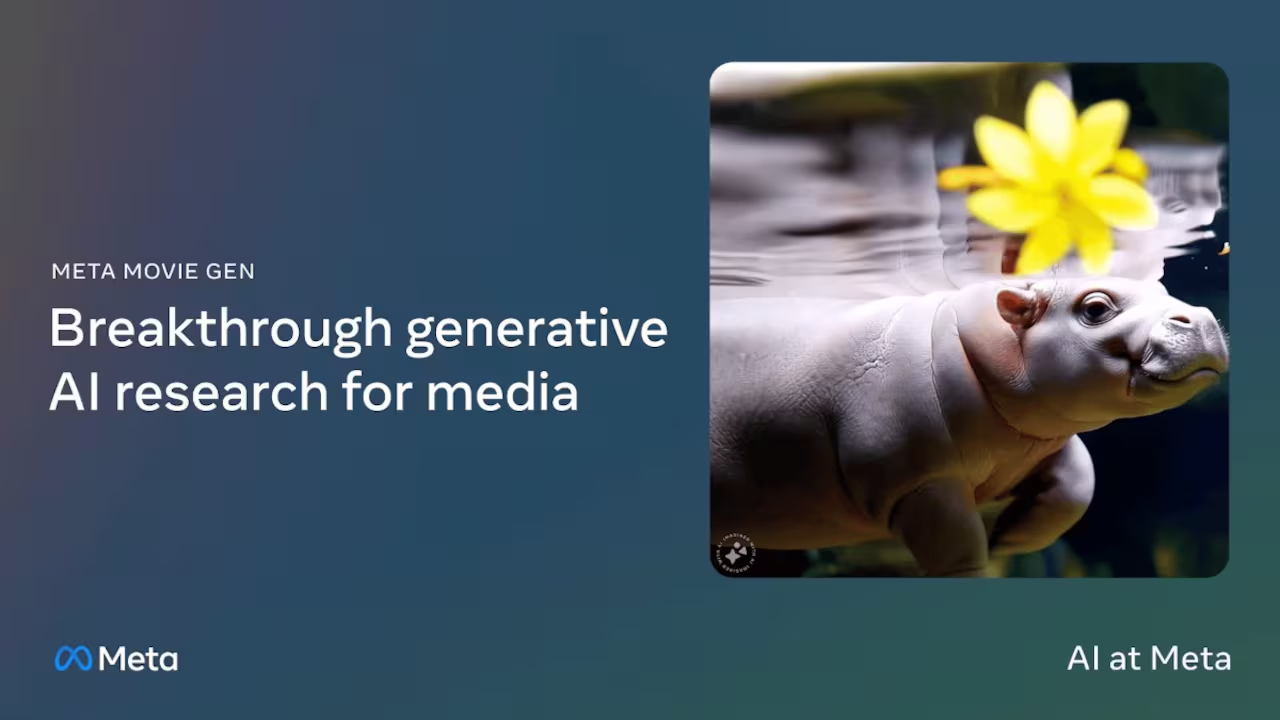

Meta has introduced Movie Gen, an AI-powered text-to-video and sound generator that lets users create and edit videos from text inputs. The tool can also turn photos into videos and generate or extend soundtracks based on prompts, which positions it alongside leading media generation platforms like OpenAI’s Sora.

Meta’s Mission With the New Feature

Meta’s mission with Movie Gen is to democratize creativity by giving both aspiring filmmakers and casual content creators access to tools that enhance their creative expression. According to the company’s research, Movie Gen lets users produce custom videos and sounds from simple text inputs, and it outperforms other industry models in Meta’s own tests.

This tool is part of Meta’s ongoing commitment to sharing AI research with the public. It builds on previous initiatives like the “Make-A-Scene” series, which enabled users to create images, audio, video, and 3D animations. With Movie Gen, Meta has reached a new milestone, merging multiple modalities to give users unprecedented control over their creative outputs. Meta emphasizes that while generative AI offers exciting possibilities, it is meant to help artists and animators enhance their work rather than replace them.

Key Features of Movie Gen

Video Generation: Using a 30B-parameter transformer model, Movie Gen can generate videos up to 16 seconds long at 16 frames per second. It combines text-to-image and text-to-video techniques and handles object motion, subject interactions, and camera movements with precision.

Personalized Video Generation: The tool can create personalized videos by combining a person’s image with a text prompt. It excels at preserving human identity and motion.

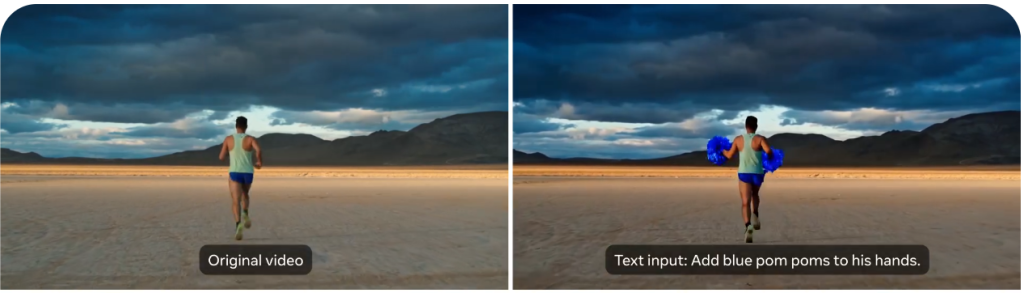

Precise Video Editing: Users can edit videos with high accuracy, making localized edits (like adding or removing elements) and global edits (such as changing backgrounds or styles) without compromising the rest of the content.

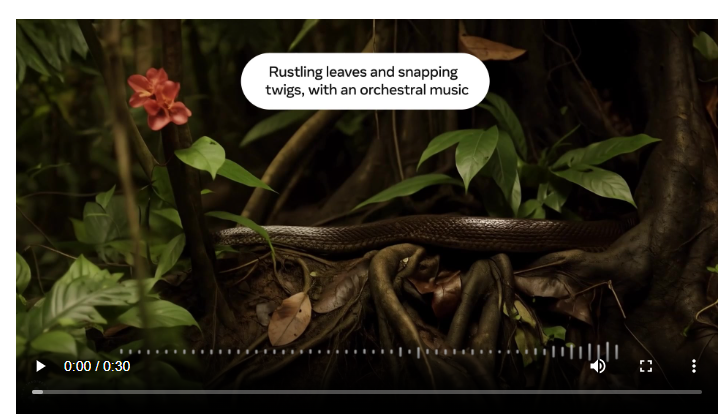

Audio Generation: A 13B-parameter model generates audio up to 45 seconds long, including sound effects, background music, and ambient sounds, all synced with the video. An audio extension feature allows for coherent sound generation for longer videos.
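
To put the figures above in perspective, here is a rough back-of-the-envelope sketch in Python. It only uses the numbers quoted in this article (16 seconds at 16 frames per second, and 45 seconds of audio per generation). The function names are made up for illustration, and the idea that longer videos are covered by back-to-back 45-second audio segments is a simplifying assumption, not a description of how Meta’s audio extension actually works.

```python
import math

# Figures quoted in the article
MAX_VIDEO_SECONDS = 16   # longest clip Movie Gen is said to generate
VIDEO_FPS = 16           # frames per second for generated video
MAX_AUDIO_SECONDS = 45   # longest audio clip from a single generation


def frames_per_clip(seconds=MAX_VIDEO_SECONDS, fps=VIDEO_FPS):
    """Number of frames in a clip of the given length and frame rate."""
    return seconds * fps


def audio_segments_needed(video_seconds, segment_seconds=MAX_AUDIO_SECONDS):
    """Hypothetical estimate: how many back-to-back audio segments would
    cover a video of this length, assuming no overlap between segments."""
    return math.ceil(video_seconds / segment_seconds)


if __name__ == "__main__":
    print(frames_per_clip())            # frames in a full-length clip
    print(audio_segments_needed(120))   # segments for a two-minute video
```

Under these assumptions, a full-length clip works out to 256 frames, and a two-minute video would need three audio segments.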

Results and Innovations by Meta

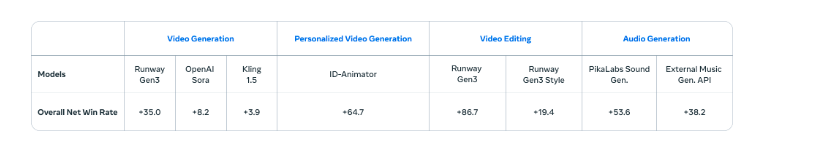

Meta’s foundation models have led to significant technical innovations in architecture, training methods, and evaluation protocols. Human evaluators have consistently preferred Movie Gen over other industry alternatives across its four main capabilities. Meta has also published a detailed 92-page research paper outlining the technical insights behind Movie Gen.

Despite Movie Gen’s promise, Meta acknowledges limitations, such as long generation times and the need for further optimization. The company says it is working on these aspects as development continues.

Meta plans to collaborate with filmmakers and creators to refine Movie Gen based on their feedback. The company envisions a future where users can create personalized videos, share content on platforms like Reels, or generate custom animations for apps like WhatsApp.