Meta announces LLaMa 2, a new family of AI models. In addition, it intends to power chatbots like OpenAI’s ChatGPT, Bing Chat, and other contemporary chatbots. Meta asserts that LLaMa 2 outperforms the LLaMa models from the previous generation based on a variety of publicly available data.

About LLaMa by Meta

The successor of LLaMa is a group of models that could produce text and code in response to commands. In addition, it can be akin to other chatbot-like systems, LLaMa 2. However, LLaMa was only accessible upon request. Out of concern for abuse, Meta chose to control access to the models. Despite these precautions, LLaMa later slipped online and propagated among many AI communities.

About LLaMa 2

On the other hand, LLaMa 2 will be available for fine-tuning on Hugging Face’s AI model hosting platform. In addition, it includes AWS, Azure, and for free for research and commercial usage.

Additionally, Meta claims that it will be simpler to use because it is Windows-optimized. This is due to the strengthened cooperation with Microsoft and Qualcomm’s Snapdragon-equipped smartphones and computers. According to Qualcomm, LLaMa 2 will be available on Snapdragon-powered devices in 2024.

Availability of New Model of Meta

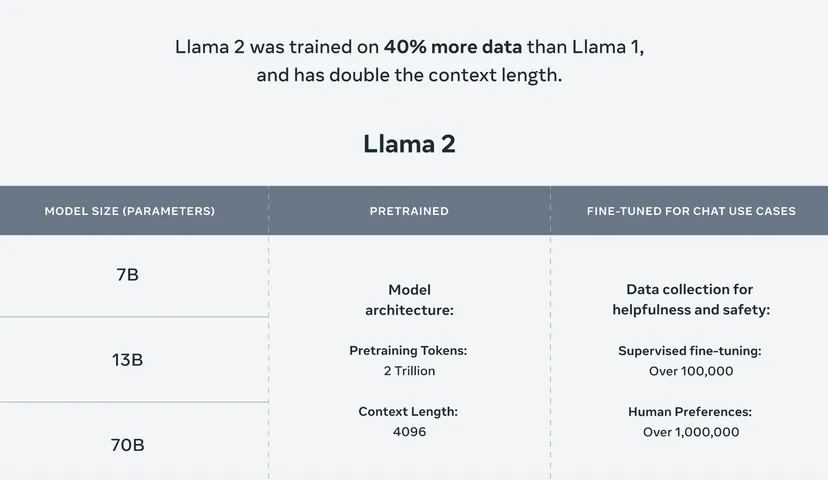

LLaMa 2 is available in LLaMa 2 and LLaMa 2-Chat varieties. In addition, the latter of being adapted for two-way talks. There are three more levels of sophistication available for LLaMa 2 and LLaMa 2-Chat: 7 billion parameters, 13 billion parameters, and 70 billion parameters. “Parameters” are the components of a model acquired from training data and fundamentally define the model’s competence on a problem.

Closing Note

Two million tokens help to train LLaMa 2. In addition, the token is a piece of raw text, such as “fan,” “tas,” and “tic” for the word “fantastic.” That is almost twice as many tokens as LLaMa learned (1.4 trillion). Additionally, more tokens are generally better for generative AI. It seems that GPT-4 learned on trillions of tokens, just like Google’s current large language model (LLM), PaLM 2. It runs on 3.6 million tokens.

Also Read: https://thecitizenscoop.com/twitter-is-testing-on-new-publishing-tool-for-long-articles/