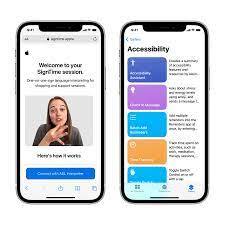

In line with its mission to create products for everyone, Apple Inc. has unveiled new device features for cognitive, vision, hearing, and mobility accessibility. The tech giant also unveiled tools for people who cannot speak or are at risk of losing their ability to speak.

Apple Inc. created these accessibility features so that everyone can use an iPhone or iPad more easily and more independently.

Among the features are Assistive Access for people with cognitive disabilities, Live Speech for people who cannot speak, and Detection Mode for people who are blind or have low vision.

About Apple's Features

Assistive Access:

Apple introduced Assistive Access to reduce cognitive strain when using an iPhone or iPad. The feature was designed in response to feedback from people with cognitive disabilities and their trusted supporters.

It includes a customized experience for Phone and FaceTime, which are combined into a single Calls app, along with Messages, Camera, Photos, and Music. The feature offers a distinct interface with high-contrast buttons and large text labels.

According to Katy Schmid, senior director of National Program Initiatives at The Arc of the United States, “the intellectual and developmental disability community is bursting with creativity.” However, technology often poses physical, visual, or knowledge barriers for these individuals.

Live Speech and Personal Voice:

For people who are unable to speak, Apple Inc. has created Live Speech. With it, users can type what they want to say and have it spoken aloud during phone calls. Users can also save frequently used phrases so they can respond quickly in conversations with family and friends.

Personal Voice can be used to generate a voice that sounds like the user, for people who are at risk of losing their ability to speak. Users only need to record a 15-minute voice sample on an iPhone or iPad. The feature then uses machine learning to create the voice and keep the user's data secure.

Point and Speak:

The Point and Speak feature in the Magnifier tool makes it easier for people with vision impairments to interact with physical objects that carry several text labels.

When users point to an object, the system reads aloud the text label associated with it. This makes it simpler to find and recognize the information they need.

The Point and Speak function combines input from the Camera app, the LiDAR Scanner, and on-device machine learning.

As users move a fingertip across a keypad, the system uses this combination to read aloud the text shown on each button, which makes the device easier to operate.